MBDyn-1.7.3

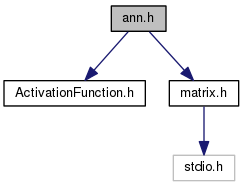

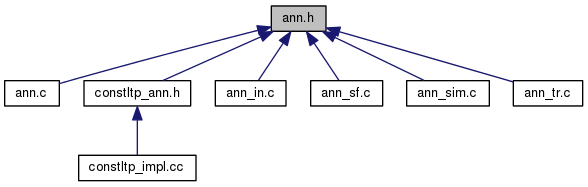

Classes | |

| struct | ANN |

Macros | |

| #define | ANN_W_A_NONE (0x0U) |

| #define | ANN_W_A_TEXT (0x1U) |

| #define | ANN_W_A_BIN (0x2U) |

| #define | ANN_FEEDBACK_NONE (0x0U) |

| #define | ANN_FEEDBACK_UPDATE (0x1U) |
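The `ANN_W_A_TEXT` and `ANN_W_A_BIN` values occupy separate bits, which suggests (this page does not confirm it) that the `unsigned` argument of `ANN_write()` is tested with bitwise masks. The sketch below shows that kind of test under this assumption. The macro values are copied from the list above so the fragment compiles on its own, and `write_flags_name()` is a hypothetical helper that does not exist in `ann.h`.

```c
#include <assert.h>

/* Values copied from the macro list above; real code would include ann.h. */
#define ANN_W_A_NONE (0x0U)
#define ANN_W_A_TEXT (0x1U)
#define ANN_W_A_BIN  (0x2U)

/* Hypothetical helper (not in ann.h): name the format bits set in `flags`.
 * Treating the values as combinable bit flags is an assumption. */
static const char *write_flags_name(unsigned flags)
{
    unsigned known = ANN_W_A_TEXT | ANN_W_A_BIN;

    /* Reject bits this sketch does not know about. */
    assert((flags & ~known) == 0U);

    if ((flags & ANN_W_A_TEXT) && (flags & ANN_W_A_BIN)) {
        return "text+binary";
    }
    if (flags & ANN_W_A_TEXT) {
        return "text";
    }
    if (flags & ANN_W_A_BIN) {
        return "binary";
    }
    return "none";
}
```

Whether `ANN_write()` accepts both bits at once is not stated here; check `ann.c` before relying on the combined case.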

Typedefs | |

| typedef matrix * | ANN_vector_matrix |

| typedef vector * | ANN_vector_vector |

| typedef struct ANN | ANN |

Enumerations | |

| enum | ann_res_t { ANN_OK = 0, ANN_NO_MEMORY, ANN_NO_FILE, ANN_DATA_ERROR, ANN_MATRIX_ERROR, ANN_GEN_ERROR } |
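Most functions in this header return `ann_res_t`, and `ANN_OK` is the only enumerator with the value 0, so a caller can reasonably treat any other value as a failure. The following is a minimal sketch of that checking pattern. The enum is re-declared only to keep the fragment self-contained (real code would include `ann.h`), and `ann_res_name()` and `ann_failed()` are hypothetical helpers, not library functions.

```c
#include <assert.h>
#include <stdio.h>

/* Re-declared from the listing above so this sketch compiles on its own. */
typedef enum ann_res_t {
    ANN_OK = 0,
    ANN_NO_MEMORY,
    ANN_NO_FILE,
    ANN_DATA_ERROR,
    ANN_MATRIX_ERROR,
    ANN_GEN_ERROR
} ann_res_t;

/* Hypothetical helper (not in ann.h): map a result code to its name. */
static const char *ann_res_name(ann_res_t res)
{
    switch (res) {
    case ANN_OK:           return "ANN_OK";
    case ANN_NO_MEMORY:    return "ANN_NO_MEMORY";
    case ANN_NO_FILE:      return "ANN_NO_FILE";
    case ANN_DATA_ERROR:   return "ANN_DATA_ERROR";
    case ANN_MATRIX_ERROR: return "ANN_MATRIX_ERROR";
    case ANN_GEN_ERROR:    return "ANN_GEN_ERROR";
    }
    return "unknown";
}

/* Hypothetical helper: report a failed call on stderr.
 * Returns 0 when res is ANN_OK and 1 otherwise. */
static int ann_failed(ann_res_t res, const char *where)
{
    assert(where != NULL);

    if (res == ANN_OK) {
        return 0;
    }
    fprintf(stderr, "%s failed: %s\n", where, ann_res_name(res));
    return 1;
}
```

A caller could then wrap each library call, for example `if (ann_failed(ANN_reset(&net), "ANN_reset")) { ... }`, where `net` is an already initialized `ANN`.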

| enum | ann_training_mode_t { ANN_TM_BATCH, ANN_TM_SEQUENTIAL } |
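The two training modes usually differ in when the weights change: batch training accumulates the gradient over all samples and updates once per epoch, while sequential training updates after every sample. The sketch below illustrates that general distinction on a one-weight toy model `y = w * x` with error `E = 0.5 * (w*x - y)^2`. It is not the code of `ANN_TrainingEpoch()`; `toy_epoch()` and its parameters are hypothetical, and the enum is re-declared only so the fragment compiles on its own.

```c
#include <assert.h>
#include <stddef.h>

/* Re-declared from the listing above; real code would include ann.h. */
typedef enum ann_training_mode_t {
    ANN_TM_BATCH,
    ANN_TM_SEQUENTIAL
} ann_training_mode_t;

/* Hypothetical toy epoch (not part of ann.h): one pass of gradient
 * descent over n samples for the model y = w * x.
 * Returns the weight after the epoch. */
static double toy_epoch(double w, const double *x, const double *y,
                        int n, double eta, ann_training_mode_t mode)
{
    double grad_sum = 0.0;
    int i;

    assert(x != NULL && y != NULL && n > 0);

    for (i = 0; i < n; i++) {
        /* dE/dw for E = 0.5 * (w*x - y)^2 */
        double grad = (w * x[i] - y[i]) * x[i];

        if (mode == ANN_TM_SEQUENTIAL) {
            /* Sequential: the weight moves after each sample. */
            w -= eta * grad;
        } else {
            /* Batch: only accumulate; w stays fixed during the pass. */
            grad_sum += grad;
        }
    }

    if (mode == ANN_TM_BATCH) {
        /* Batch: a single update with the summed gradient. */
        w -= eta * grad_sum;
    }
    return w;
}
```

With samples `x = {1, 2}`, `y = {2, 4}`, an initial weight of 0 and `eta = 0.1`, one batch epoch gives `w = 1.0`, while one sequential epoch gives `w = 0.92`, because the second sample's gradient is evaluated at the already updated weight.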

Functions | |

| ann_res_t | ANN_init (ANN *, const char *) |

| ann_res_t | ANN_destroy (ANN *) |

| ann_res_t | ANN_write (ANN *, FILE *, unsigned) |

| ann_res_t | ANN_sim (ANN *, vector *, vector *, unsigned) |

| ann_res_t | ANN_DataRead (matrix *, int *, char *) |

| ann_res_t | ANN_DataWrite (matrix *, char *) |

| double | ANN_InternalFunction (double, ANN *) |

| double | ANN_InternalFunctionDer (double, ANN *) |

| ann_res_t | ANN_vector_matrix_init (ANN_vector_matrix *, int *, int) |

| ann_res_t | ANN_vector_vector_init (ANN_vector_vector *, int *, int) |

| ann_res_t | ANN_dEdW (ANN *, vector *) |

| ann_res_t | ANN_dXdW (ANN *, int, int, int) |

| ann_res_t | ANN_WeightUpdate (ANN *, ANN_vector_matrix, double) |

| ann_res_t | ANN_TrainingEpoch (ANN *, matrix *, matrix *, matrix *, int, ann_training_mode_t) |

| ann_res_t | ANN_reset (ANN *) |

| ann_res_t | ANN_TotalError (matrix *, matrix *, double *) |

| ann_res_t | ANN_vector_matrix_ass (ANN_vector_matrix *, ANN_vector_matrix *, int *, int, double) |

| ann_res_t | ANN_jacobian_matrix (ANN *, matrix *) |

| void | ANN_error (ann_res_t, char *) |

| #define ANN_FEEDBACK_NONE (0x0U) |

Definition at line 51 of file ann.h.

Referenced by AnnElasticConstitutiveLaw< T, Tder >::Update() and AnnElasticConstitutiveLaw< doublereal, doublereal >::Update().

| #define ANN_FEEDBACK_UPDATE (0x1U) |

Definition at line 52 of file ann.h.

Referenced by AnnElasticConstitutiveLaw< T, Tder >::AfterConvergence(), AnnElasticConstitutiveLaw< doublereal, doublereal >::AfterConvergence(), ANN_sim(), ANN_TrainingEpoch(), and main().

| #define ANN_W_A_BIN (0x2U) |

Definition at line 49 of file ann.h.

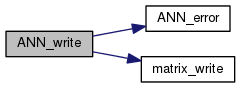

Referenced by ANN_write() and main().

| #define ANN_W_A_TEXT (0x1U) |

Definition at line 48 of file ann.h.

Referenced by ANN_write() and main().

| typedef matrix* ANN_vector_matrix |

| typedef vector* ANN_vector_vector |

| enum ann_res_t |

| enum ann_training_mode_t |

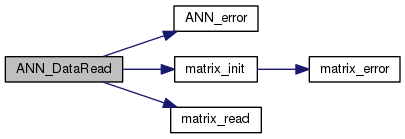

| ann_res_t ANN_DataRead (matrix *, int *, char *) |

Definition at line 475 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_NO_FILE, ANN_OK, matrix_init(), matrix_read(), and W_M_BIN.

Referenced by main().

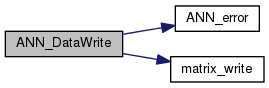

| ann_res_t ANN_DataWrite (matrix *, char *) |

Definition at line 504 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_NO_FILE, ANN_OK, matrix_write(), matrix::Ncolumn, matrix::Nrow, and W_M_BIN.

Referenced by main().

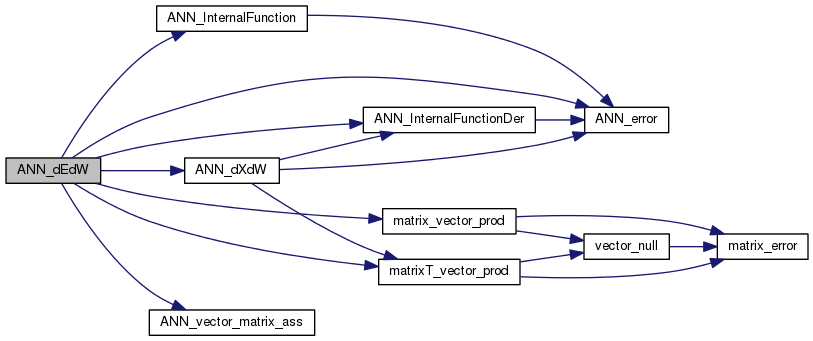

| ann_res_t ANN_dEdW (ANN *, vector *) |

Definition at line 645 of file ann.c.

References ANN_dXdW(), ANN_error(), ANN_GEN_ERROR, ANN_InternalFunction(), ANN_InternalFunctionDer(), ANN_MATRIX_ERROR, ANN_OK, ANN_vector_matrix_ass(), ANN::dEdV, ANN::dEdW, ANN::dXdW, ANN::dy, ANN::dydV, ANN::dydW, ANN::eta, matrix::mat, matrix_vector_prod(), matrixT_vector_prod(), ANN::N_layer, ANN::N_neuron, ANN::N_output, ANN::r, ANN::rho, ANN::temp, ANN::v, vector::vec, ANN::W, and ANN::Y_neuron.

Referenced by ANN_TrainingEpoch().

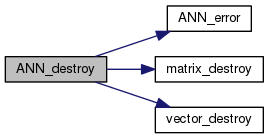

| ann_res_t ANN_destroy (ANN *) |

Definition at line 218 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_OK, ANN::dEdV, ANN::dEdW, ANN::dW, ANN::dXdu, ANN::dXdW, ANN::dy, ANN::dydV, ANN::dydW, ANN::error, ANN::input, ANN::input_scale, ANN::jacobian, matrix_destroy(), ANN::N_layer, ANN::N_neuron, ANN::output, ANN::output_scale, ANN::r, ANN::temp, ANN::v, vector_destroy(), ANN::W, ANN::w_destroy, ANN::w_priv, ANN::Y_neuron, and ANN::yD.

Referenced by main(), AnnElasticConstitutiveLaw< T, Tder >::~AnnElasticConstitutiveLaw(), and AnnElasticConstitutiveLaw< doublereal, doublereal >::~AnnElasticConstitutiveLaw().

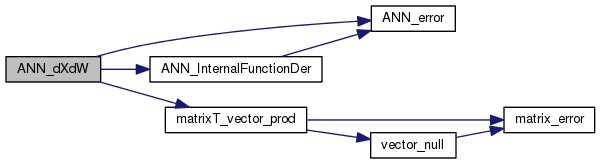

| ann_res_t ANN_dXdW (ANN *, int, int, int) |

Definition at line 609 of file ann.c.

References ANN_error(), ANN_InternalFunctionDer(), ANN_MATRIX_ERROR, ANN_OK, ANN::dXdW, ANN::dy, matrix::mat, matrixT_vector_prod(), ANN::N_input, ANN::N_layer, ANN::N_neuron, ANN::r, ANN::temp, ANN::v, vector::vec, and ANN::W.

Referenced by ANN_dEdW().

| void ANN_error (ann_res_t, char *) |

Definition at line 900 of file ann.c.

References ANN_DATA_ERROR, ANN_GEN_ERROR, ANN_MATRIX_ERROR, ANN_NO_FILE, and ANN_NO_MEMORY.

Referenced by ANN_DataRead(), ANN_DataWrite(), ANN_dEdW(), ANN_destroy(), ANN_dXdW(), ANN_init(), ANN_InternalFunction(), ANN_InternalFunctionDer(), ANN_jacobian_matrix(), ANN_reset(), ANN_sim(), ANN_TotalError(), ANN_TrainingEpoch(), ANN_vector_matrix_init(), ANN_vector_vector_init(), ANN_WeightUpdate(), and ANN_write().

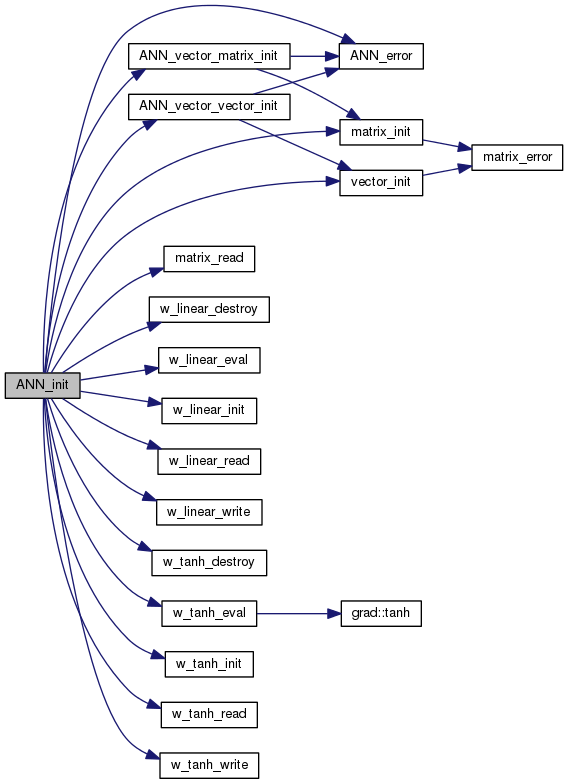

| ann_res_t ANN_init (ANN *, const char *) |

Definition at line 48 of file ann.c.

References ANN_DATA_ERROR, ANN_error(), ANN_MATRIX_ERROR, ANN_NO_FILE, ANN_NO_MEMORY, ANN_OK, ANN_vector_matrix_init(), ANN_vector_vector_init(), ANN::dEdV, ANN::dEdW, ANN::dW, ANN::dXdu, ANN::dXdW, ANN::dy, ANN::dydV, ANN::dydW, ANN::error, ANN::eta, ANN::input, ANN::input_scale, ANN::jacobian, matrix_init(), matrix_read(), ANN::N_input, ANN::N_layer, ANN::N_neuron, ANN::N_output, ANN::output, ANN::output_scale, ANN::r, ANN::rho, ANN::temp, ANN::v, vector_init(), ANN::W, ANN::w_destroy, ANN::w_eval, W_F_NONE, ANN::w_init, w_linear_destroy(), w_linear_eval(), w_linear_init(), w_linear_read(), w_linear_write(), W_M_BIN, ANN::w_priv, ANN::w_read, w_tanh_destroy(), w_tanh_eval(), w_tanh_init(), w_tanh_read(), w_tanh_write(), ANN::w_write, ANN::Y_neuron, and ANN::yD.

Referenced by AnnElasticConstitutiveLaw< T, Tder >::AnnInit(), AnnElasticConstitutiveLaw< doublereal, doublereal >::AnnInit(), and main().

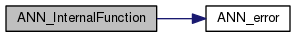

| double ANN_InternalFunction (double, ANN *) |

Definition at line 526 of file ann.c.

References ANN_error(), ANN_GEN_ERROR, ANN::w_eval, and ANN::w_priv.

Referenced by ANN_dEdW() and ANN_sim().

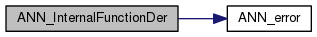

| double ANN_InternalFunctionDer (double, ANN *) |

Definition at line 540 of file ann.c.

References ANN_error(), ANN_GEN_ERROR, ANN::w_eval, and ANN::w_priv.

Referenced by ANN_dEdW(), ANN_dXdW(), and ANN_jacobian_matrix().

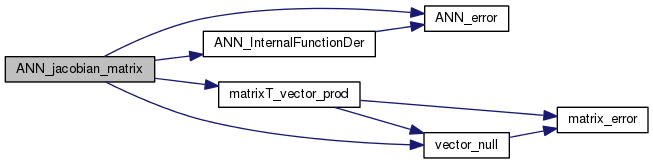

| ann_res_t ANN_jacobian_matrix (ANN *, matrix *) |

Definition at line 921 of file ann.c.

References ANN_error(), ANN_InternalFunctionDer(), ANN_MATRIX_ERROR, ANN_OK, ANN::dXdu, ANN::input_scale, matrix::mat, matrixT_vector_prod(), ANN::N_input, ANN::N_layer, ANN::N_neuron, ANN::N_output, ANN::output_scale, ANN::temp, ANN::v, vector::vec, vector_null(), and ANN::W.

Referenced by AnnElasticConstitutiveLaw< T, Tder >::Update(), AnnElasticConstitutiveLaw< doublereal, doublereal >::Update(), AnnViscoElasticConstitutiveLaw< T, Tder >::Update(), and AnnViscoElasticConstitutiveLaw< doublereal, doublereal >::Update().

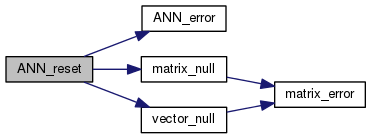

| ann_res_t ANN_reset (ANN *) |

Definition at line 828 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_OK, ANN::dW, ANN::dy, matrix_null(), ANN::N_layer, ANN::N_neuron, ANN::r, vector_null(), and ANN::yD.

Referenced by main().

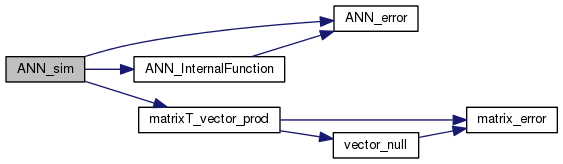

| ann_res_t ANN_sim (ANN *, vector *, vector *, unsigned) |

Definition at line 425 of file ann.c.

References ANN_error(), ANN_FEEDBACK_UPDATE, ANN_InternalFunction(), ANN_MATRIX_ERROR, ANN_OK, ANN::input_scale, matrix::mat, matrixT_vector_prod(), ANN::N_input, ANN::N_layer, ANN::N_neuron, ANN::N_output, ANN::output_scale, ANN::r, ANN::v, vector::vec, ANN::W, ANN::Y_neuron, and ANN::yD.

Referenced by ANN_TrainingEpoch(), main(), AnnElasticConstitutiveLaw< T, Tder >::Update(), AnnElasticConstitutiveLaw< doublereal, doublereal >::Update(), AnnViscoElasticConstitutiveLaw< T, Tder >::Update(), and AnnViscoElasticConstitutiveLaw< doublereal, doublereal >::Update().

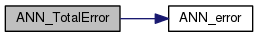

| ann_res_t ANN_TotalError (matrix *, matrix *, double *) |

Definition at line 861 of file ann.c.

References ANN_error(), ANN_GEN_ERROR, ANN_OK, matrix::mat, matrix::Ncolumn, and matrix::Nrow.

Referenced by main().

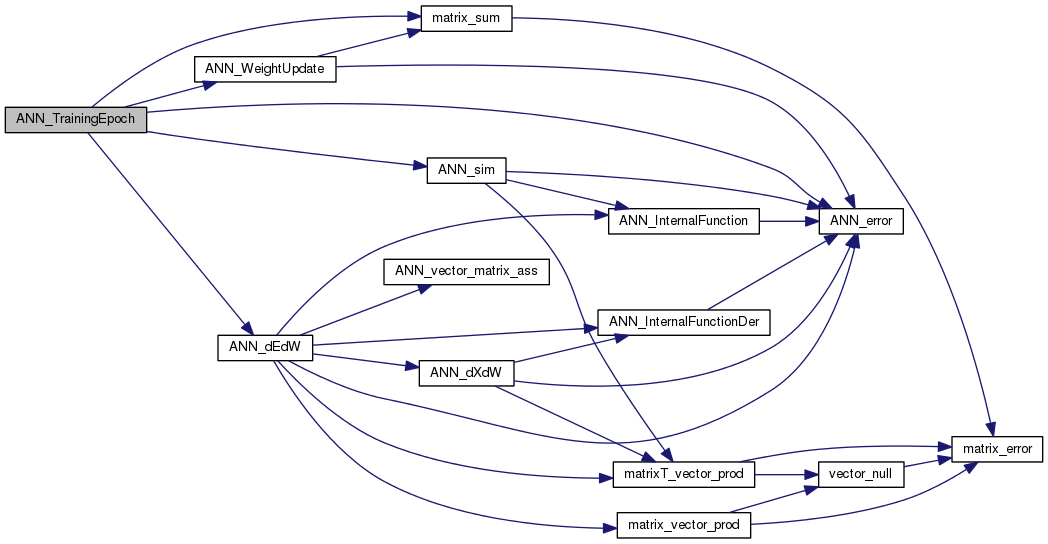

| ann_res_t ANN_TrainingEpoch (ANN *, matrix *, matrix *, matrix *, int, ann_training_mode_t) |

Definition at line 769 of file ann.c.

References ANN_dEdW(), ANN_error(), ANN_FEEDBACK_UPDATE, ANN_GEN_ERROR, ANN_MATRIX_ERROR, ANN_OK, ANN_sim(), ANN_TM_BATCH, ANN_TM_SEQUENTIAL, ANN_WeightUpdate(), ANN::dEdW, ANN::dW, ANN::error, ANN::input, matrix::mat, matrix_sum(), ANN::N_input, ANN::N_layer, ANN::N_output, ANN::output, and vector::vec.

Referenced by main().

| ann_res_t ANN_vector_matrix_ass (ANN_vector_matrix *, ANN_vector_matrix *, int *, int, double) |

| ann_res_t ANN_vector_matrix_init (ANN_vector_matrix *, int *, int) |

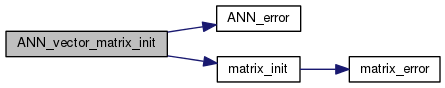

Definition at line 569 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_NO_MEMORY, ANN_OK, and matrix_init().

Referenced by ANN_init() and main().

| ann_res_t ANN_vector_vector_init (ANN_vector_vector *, int *, int) |

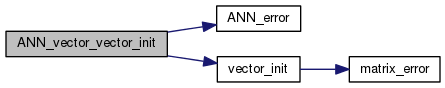

Definition at line 588 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_NO_MEMORY, ANN_OK, and vector_init().

Referenced by ANN_init().

| ann_res_t ANN_WeightUpdate (ANN *, ANN_vector_matrix, double) |

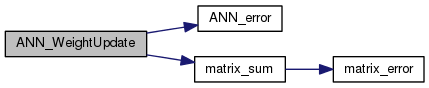

Definition at line 552 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_OK, matrix_sum(), ANN::N_layer, and ANN::W.

Referenced by ANN_TrainingEpoch() and main().

| ann_res_t ANN_write (ANN *, FILE *, unsigned) |

Definition at line 341 of file ann.c.

References ANN_error(), ANN_MATRIX_ERROR, ANN_OK, ANN_W_A_BIN, ANN_W_A_TEXT, ANN::eta, ANN::input_scale, matrix_write(), ANN::N_input, ANN::N_layer, ANN::N_neuron, ANN::N_output, ANN::output_scale, ANN::r, ANN::rho, ANN::W, W_F_BIN, W_F_TEXT, W_M_BIN, W_M_TEXT, ANN::w_priv, and ANN::w_write.

Referenced by main().